Type Theory

Our Core type theory, called "Quantitative Type Theory" ("QTT"), is derived from the paper I Got Plenty o’ Nuttin’. Modifications and extensions are documented here.

Extensions/Annotations

Core works off a system of annotations/extensions. These currently are crucial for understanding what happens to the data structures in Core.

More can be read about our extensible system here.

HR

HR is the first step into core from the frontend language. This phase is a lot like IR (Read below), however it includes names rather than De Brujin indices. Due to this, this phase is rather short lived.

Characteristics of HR

Is an Extension of HR with Names

Short Lived

IR

IR is the representation of Core in which all other extensions are

based off of. In order to facilitate fast evaluation Core is broken up

into terms and values. Values are like terms but they have no

β-redexs via type construction. Thus they statically rule out λ being

applied to arguments. This means values are in normal form.

Characteristics of IR

De Brujin indices

Extensions via Extensible

Idea of terms and values

Values have no β-redexs by construction

Free name can still be there, and could be inlined

Impossible to reduce if the Free names are a data type function

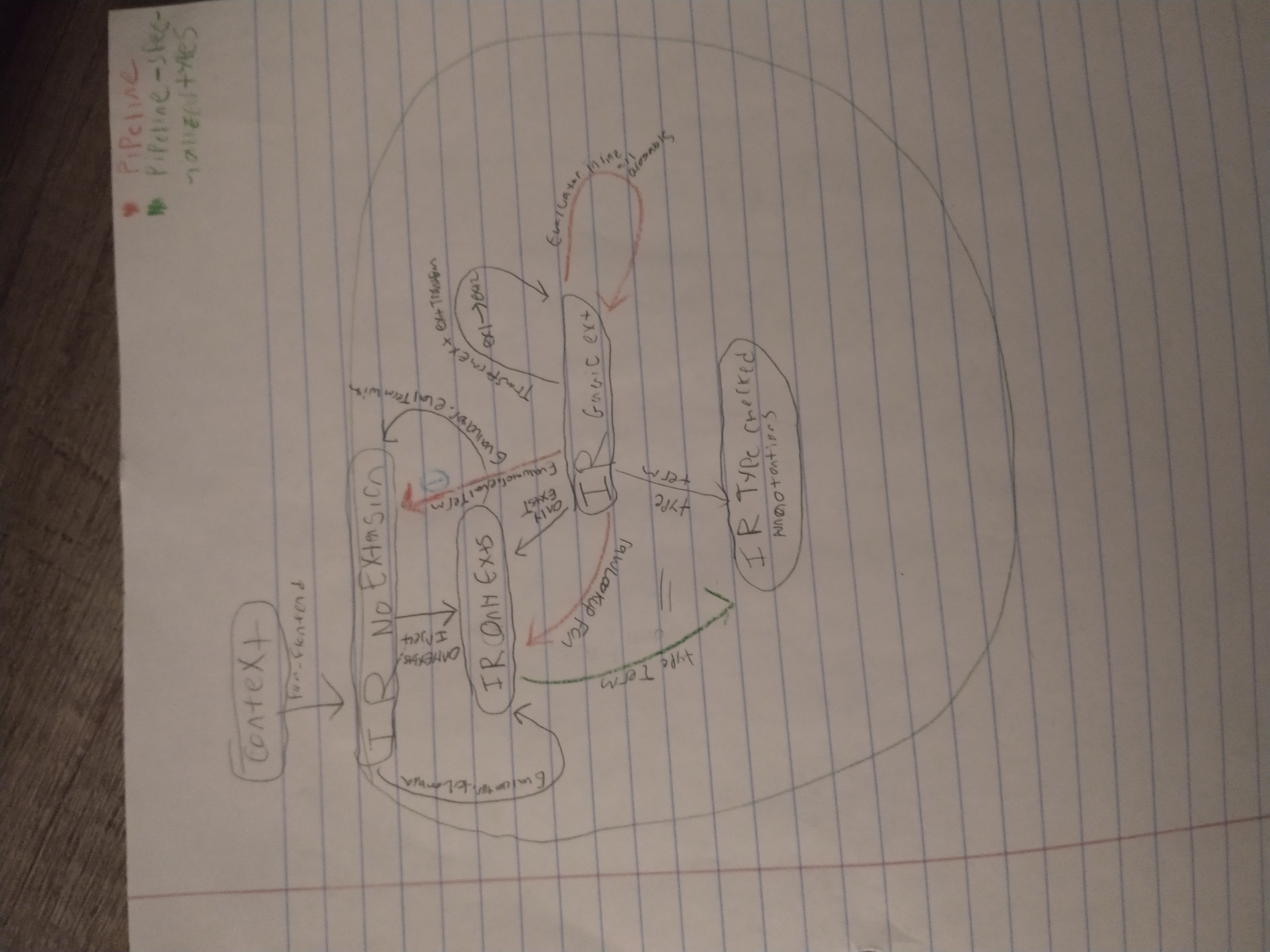

Extension Layout and mapping

In this diagram we can see various mappings that happen through the extensions of core.

Main representation

Type Checker

Type checking is the first phase of IR. This phase annotates every node with its type and usage. To facilitate fast type checking every type annotated is a fully evaluated value. Other phases like the unifier use this concept as well.

Erased

After the code gets pass through IR, we then want to erase all the

type annotations we've built up. To do this, we send the code through

the Erased representation. Unlike HR, Erased is not an extension of

IR.

Erased also erases all forms which have 0 usages. Since usages are not first class yet, this is a rather simple.

Another key characteristic of Erased is that types are separate from Terms as they are currently assumed to have 0 usages. Thus types are always erased and separated out.

This may change with time.

ErasedAnn

This is the backend specific form that Michelson uses.